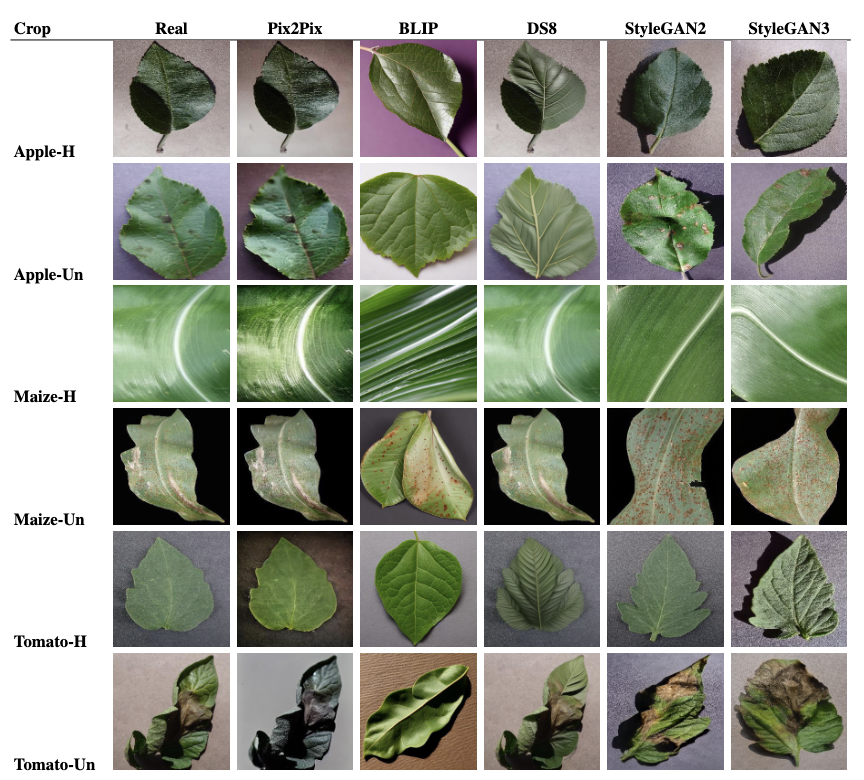

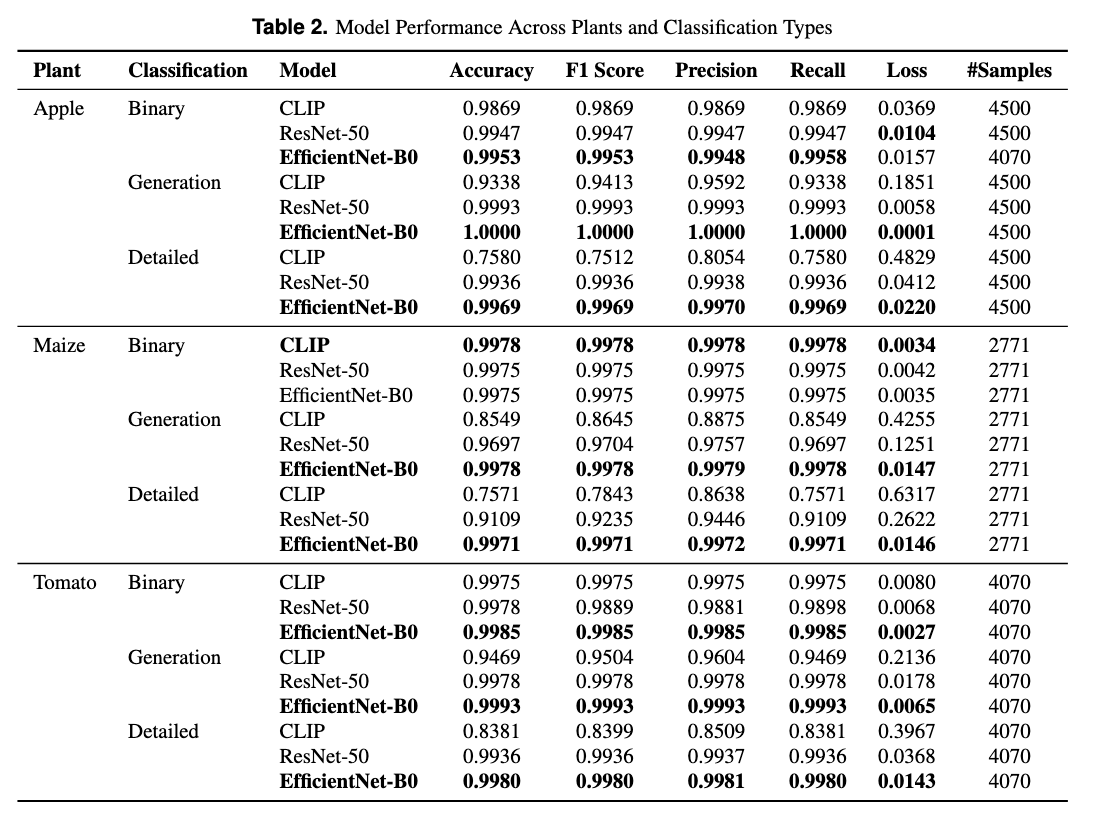

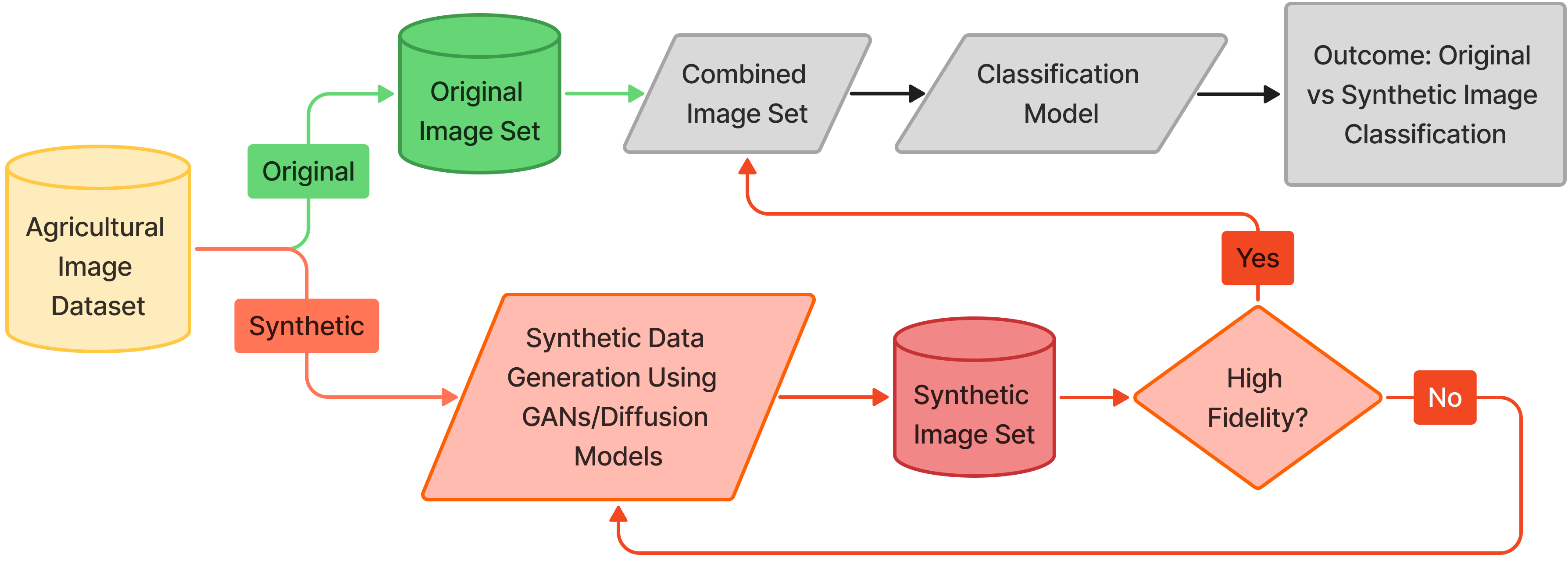

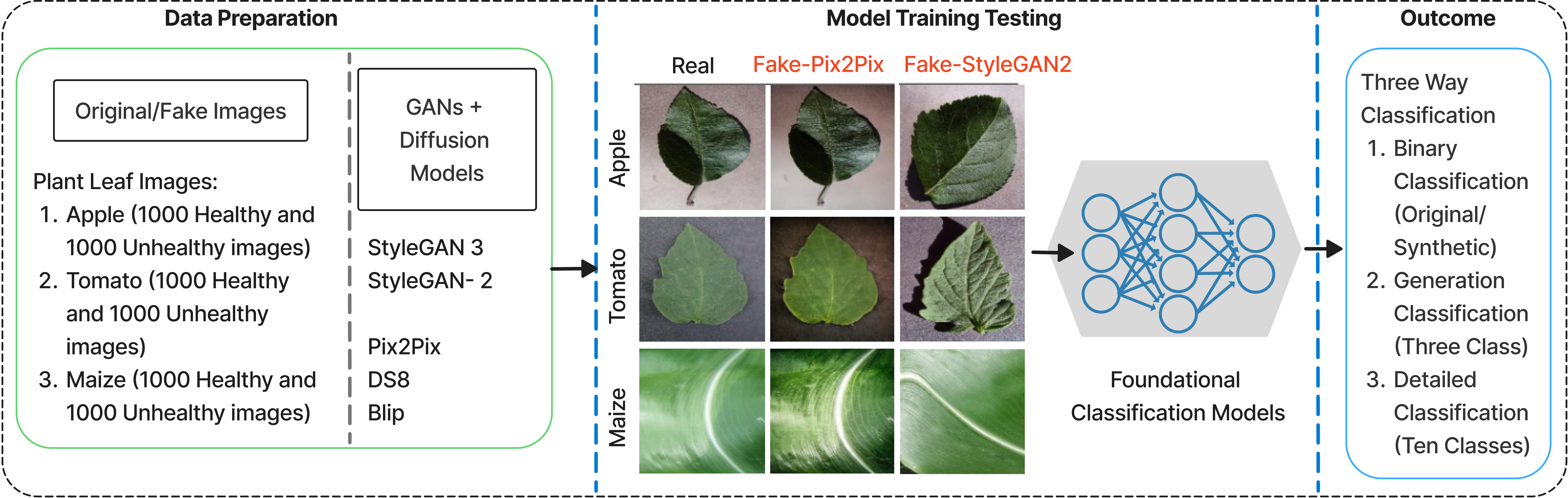

The gradual digitalization of agricultural systems through data-driven techniques has reshaped agricultural production. However, this transformation has also introduced new vulnerabilities, exposing these systems to cyber threats. While numerous domain-specific attack detection methods have been proposed, comprehensive cybersecurity frameworks tailored to agriculture are still lacking, particularly as AI becomes increasingly integrated into these systems. To address this gap, we propose a novel framework capable of classifying high-fidelity adversarial plant images. This supervised approach not only detects attacks but also identifies their specific source models. We employ state-of-the-art GAN architectures, including StyleGAN2 and StyleGAN3, alongside diffusion pipelines such as DS8, BLIP, and Pix2Pix, to produce diverse adversarial images via both image-to-image and text-to-image generation. These images are then used to train a classifier capable of distinguishing among all generation classes. Our experiments include comparative classification tasks and an analysis of accuracy degradation, which is logarithmic in the class count. This demonstrates the scalability of the framework, allowing additional computer vision tasks to be incorporated without compromising performance. As GAN and diffusion models continue to advance, our framework is designed to evolve, ensuring its generation and detection capabilities remain robust against emerging threats.

Overview

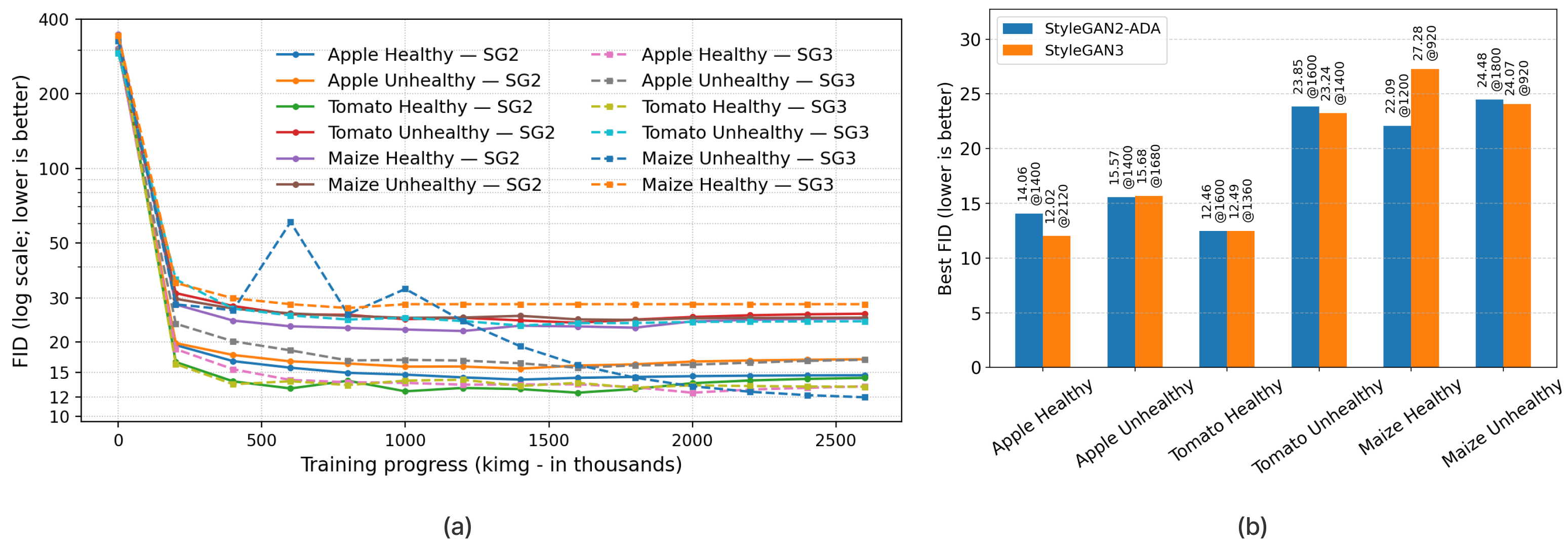

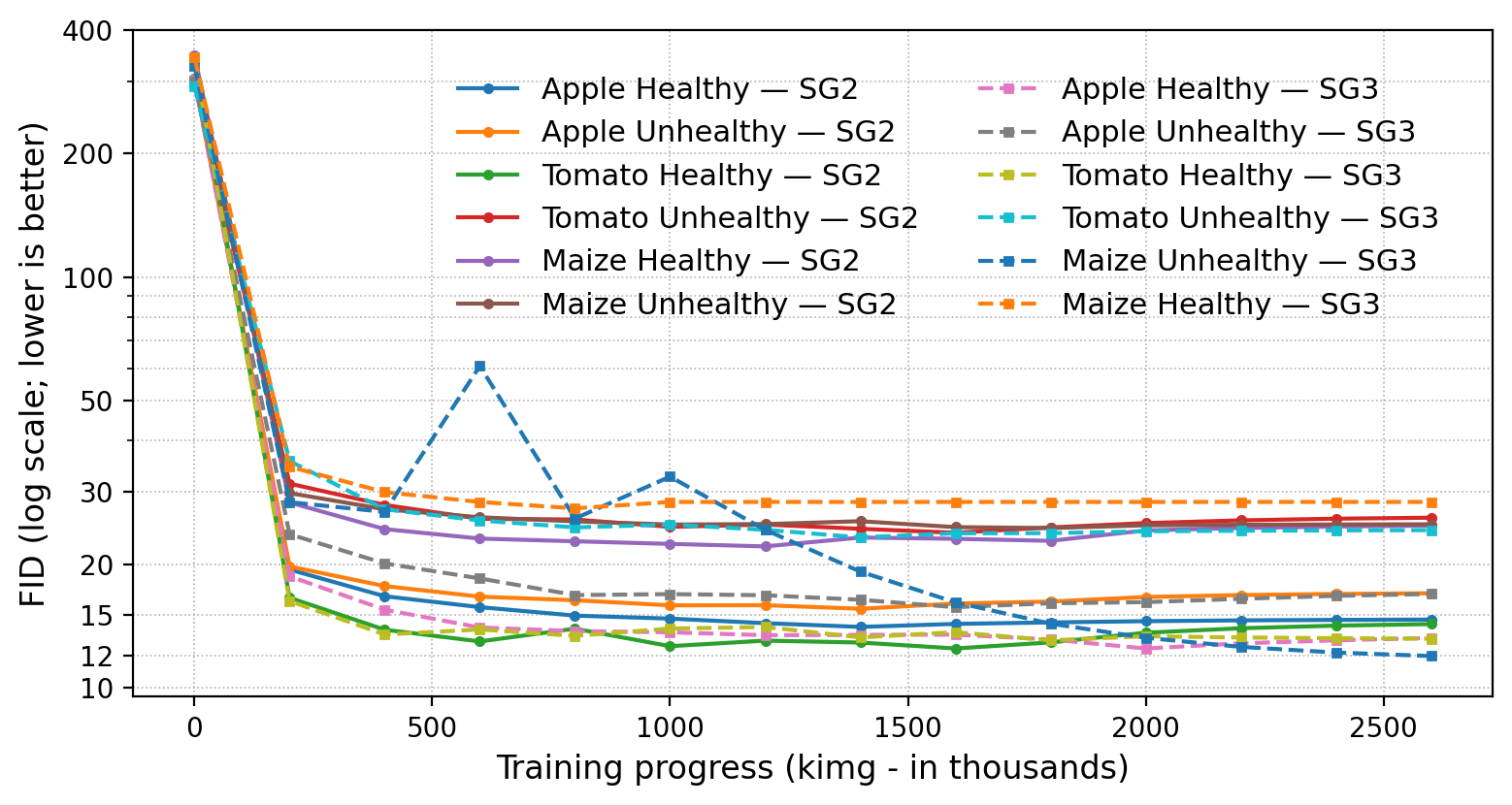

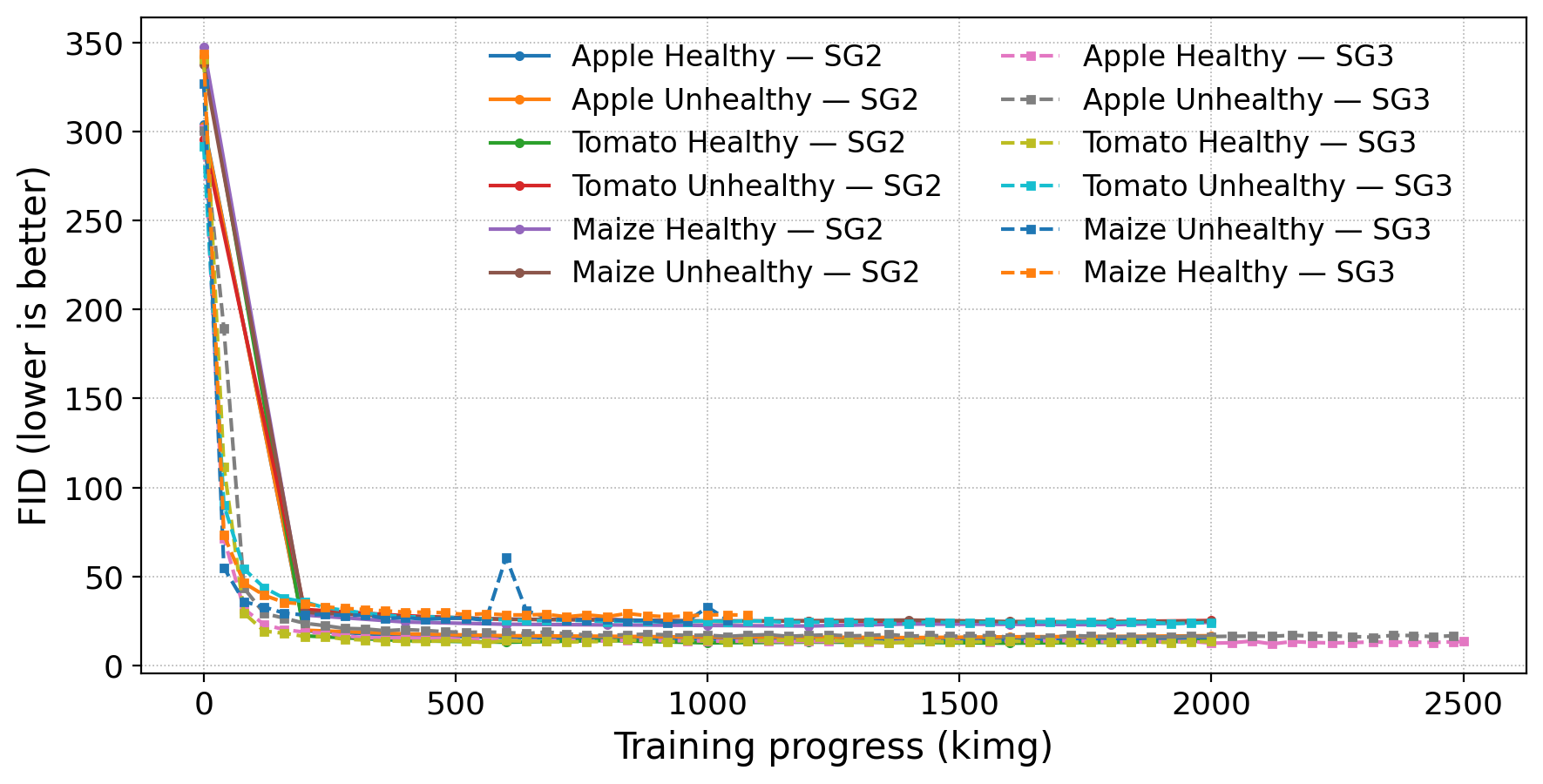

We target cyber-biosecurity risks in Agriculture 4.0 by detecting and attributing synthetic plant images introduced by adversaries. Our framework generates high-fidelity fakes with GANs (StyleGAN2/3) and diffusion pipelines (Pix2Pix, BLIP, DS8-inpainting), then trains a classifier to perform: (i) binary health detection, (ii) 3-way source detection (Real / GAN / Diffusion), and (iii) detailed 10-way crop–health–generator attribution.

Left: simplified adversarial image generation → detection pipeline. Right: framework overview across crops and generators.
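The three classification tasks described above share one underlying annotation: each image carries a crop, a health state, and a generator. The sketch below shows one way those annotations could be mapped to the binary, 3-way, and fine-grained label spaces. It is illustrative only: the `Sample` type, the generator name strings, and the helper functions are assumptions, and the fine-grained class list is built from whatever combinations appear in the data rather than from the specific 10 classes used in the experiments.

```python
from typing import Dict, Iterable, NamedTuple


class Sample(NamedTuple):
    """Hypothetical per-image annotation (field names are assumptions)."""
    crop: str        # e.g. "tomato"
    health: str      # "healthy" or "diseased"
    generator: str   # "real" or the name of a generative model


# Assumed name strings for the generator families mentioned in the text.
GAN_GENERATORS = {"stylegan2", "stylegan3"}
DIFFUSION_GENERATORS = {"pix2pix", "blip", "ds8"}


def health_label(sample: Sample) -> int:
    """Task (i): binary health detection, 0 = healthy, 1 = diseased."""
    return 0 if sample.health == "healthy" else 1


def source_label(sample: Sample) -> str:
    """Task (ii): 3-way source detection (real / gan / diffusion)."""
    if sample.generator == "real":
        return "real"
    if sample.generator in GAN_GENERATORS:
        return "gan"
    if sample.generator in DIFFUSION_GENERATORS:
        return "diffusion"
    raise ValueError(f"unknown generator: {sample.generator!r}")


def build_fine_classes(samples: Iterable[Sample]) -> Dict[Sample, int]:
    """Task (iii): one class index per distinct crop-health-generator triple."""
    return {cls: idx for idx, cls in enumerate(sorted(set(samples)))}
```

Deriving the coarse labels from the fine-grained triple keeps the three tasks consistent with each other, so a single annotated dataset can serve all of them.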

StyleGAN models are trained per crop–health class to improve fidelity under limited data. Diffusion pipelines preserve scene layout while editing only leaf regions, producing subtle yet realistic perturbations. The downstream classifier (EfficientNet-B0 / ResNet-50 / CLIP-ViT) learns generator fingerprints in addition to crop and health cues.
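To make the idea of editing only leaf regions concrete, the following is a minimal sketch of mask-based compositing, in which generated pixels replace the original only where a leaf mask is set. It assumes a binary leaf mask is already available and that both images are aligned `uint8` arrays. The function name `composite_leaf_edit` is hypothetical, and this is not necessarily how the diffusion pipelines in this work restrict their edits.

```python
import numpy as np


def composite_leaf_edit(original: np.ndarray,
                        edited: np.ndarray,
                        leaf_mask: np.ndarray) -> np.ndarray:
    """Keep `edited` pixels inside the leaf mask and `original` pixels elsewhere.

    original, edited: H x W x C arrays of the same shape and dtype.
    leaf_mask:        H x W boolean array, True on leaf pixels (assumed given).
    """
    if original.shape != edited.shape:
        raise ValueError("original and edited images must have the same shape")
    if leaf_mask.shape != original.shape[:2]:
        raise ValueError("leaf_mask must match the image height and width")
    # Broadcast the H x W mask across the channel axis.
    mask = leaf_mask.astype(bool)[..., None]
    return np.where(mask, edited, original)
```

Because pixels outside the mask are copied unchanged, the scene layout is preserved exactly and any detectable generator trace is confined to the leaf regions.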